Note... I'll be in Vancouver, B.C., for next week's LabVIEW Developer Education Days on June 26. I hope to see some of you there.

As I mentioned last time, there's a fourth kind of data that can show up in the profile window...Default Data.

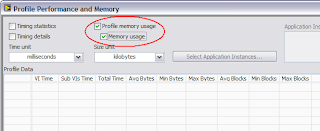

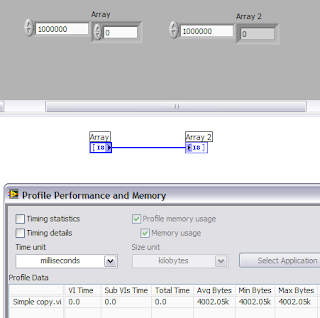

I'll go back to the simple VI I used in the last posting: an array of int8s wired to another array of int8s. The default value of each array is empty, which means that when I load the VI into memory, the front panel doesn't have the arrays allocated. (The VI also takes up only about 8 kilobytes of disk space.) For my earlier profiling tests, I typed a new value into the millionth element of the array control, which allocates the million bytes for it. When I ran this VI, it consumed five megabytes of data.
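To see why typing into the millionth element costs a million bytes, here is a rough analogy in Python (LabVIEW itself is graphical, so there's no textual LabVIEW code to show). The `set_element` helper is hypothetical: it mimics the zero-padding you see when you type past the end of an array control, not LabVIEW's actual memory manager.

```python
from array import array


def set_element(arr: array, index: int, value: int) -> None:
    """Hypothetical stand-in for typing a value past the end of an array
    control: the array is first padded with zeros up to `index`."""
    if index >= len(arr):
        # Each int8 ('b') element is one byte, so append that many zero bytes.
        arr.frombytes(bytes(index + 1 - len(arr)))
    arr[index] = value


control_data = array("b")              # empty default value: nothing allocated
set_element(control_data, 999_999, 1)  # the millionth element (index 999,999)
print(len(control_data) * control_data.itemsize)  # bytes now allocated
```

Writing one value at index 999,999 forces all 1,000,000 elements to exist, and at one byte per int8 that's the million bytes.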

Now let's see what happens when I go ahead and "Make Current Value Default" for the million-byte array...

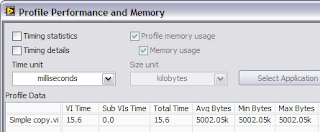

When I run the VI (and I've run it more than once, so you can see the final values in the profile window), you see that the five megabytes has turned into six megabytes. The profile window is now showing you that there's an extra megabyte of memory being consumed by this VI, because of the default data.

To take it a step further, if I also made the indicator array's current value its default, memory consumption would grow to seven megabytes.
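The five, six, and seven megabyte figures follow a simple pattern. Here is a back-of-the-envelope model of it, assuming that each array saved with default data adds one extra copy of its bytes on top of the baseline the profile window showed before any default data was saved. The names are hypothetical, and this only reproduces the arithmetic; it isn't a description of how LabVIEW allocates memory internally.

```python
from typing import Sequence

MB = 1_000_000  # decimal megabyte, to match the round numbers in this post

# Data the profile window reported before any default data was saved.
BASELINE_BYTES = 5 * MB


def estimated_vi_data_bytes(default_data_sizes: Sequence[int]) -> int:
    """Baseline plus one extra copy for every array saved with default data."""
    return BASELINE_BYTES + sum(default_data_sizes)


print(estimated_vi_data_bytes([]))        # no default data
print(estimated_vi_data_bytes([MB]))      # control's value made default
print(estimated_vi_data_bytes([MB, MB]))  # control and indicator made default
```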

Default data is often a good thing, but we sometimes find VIs where a large amount of data was accidentally saved as default. This is easy to do if you select the "Make All Current Values Default" menu item from the Edit menu. I try to stay away from this menu item, and instead only set the default value for the controls I know need it.

Pop Quiz: Default data on a front panel control is useful, for example, when the control is on the connector pane, but isn't wired in the caller's diagram. The subVI runs with the default value in that case. When is default data on an indicator useful?

Note that the VI Analyzer reports non-empty default values for arrays so that you can take a closer look at them. (The VI Analyzer is a separate add-on for LabVIEW that can check your VIs for common programming errors, style conformance, and in this case, performance issues.)
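The kind of check described above is easy to picture. Here is a minimal Python sketch that flags arrays with non-empty default values and lists the largest first. The `PanelObject` type and its fields are hypothetical; this is not the VI Analyzer's API or its actual implementation, just the idea behind the check.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class PanelObject:
    """Hypothetical summary of one front panel object."""
    name: str
    is_array: bool
    default_length: int     # number of elements saved as default data
    bytes_per_element: int  # e.g. 1 for int8


def nonempty_default_arrays(objects: List[PanelObject]) -> List[Tuple[str, int]]:
    """Return (name, default-data bytes) for each array whose saved default
    is non-empty, largest first, so the biggest ones get reviewed first."""
    hits = [
        (obj.name, obj.default_length * obj.bytes_per_element)
        for obj in objects
        if obj.is_array and obj.default_length > 0
    ]
    return sorted(hits, key=lambda hit: hit[1], reverse=True)


panel = [
    PanelObject("small table", True, 256, 4),
    PanelObject("array control", True, 1_000_000, 1),
    PanelObject("array indicator", True, 0, 1),
    PanelObject("numeric", False, 1, 8),
]
print(nonempty_default_arrays(panel))
```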

Interesting side note... When I save my new test VI to disk, how much disk space do you think it consumes? Seven megabytes? Two megabytes?

It turns out that it takes up about 8 kilobytes, which is about what it took when I hadn't saved any default data. Why is that? It's because my default data was entirely made up of zeros. The VI's data gets compressed when it's saved, and a million zeros compress very well.
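You can get a feel for this with any general-purpose compressor. The sketch below uses Python's `zlib` (DEFLATE) purely as a stand-in; the post doesn't say which compression LabVIEW uses when it saves a VI, so the exact sizes will differ.

```python
import os
import zlib

ONE_MILLION = 1_000_000


def compressed_size(data: bytes) -> int:
    """Size in bytes after zlib (DEFLATE) compression at the default level."""
    return len(zlib.compress(data))


zeros = bytes(ONE_MILLION)       # like a million-element int8 array of zeros
noise = os.urandom(ONE_MILLION)  # a million uniformly random bytes

print(compressed_size(zeros))  # on the order of a kilobyte
print(compressed_size(noise))  # about a million bytes: uniform noise doesn't shrink
```

Long runs of identical bytes collapse to almost nothing, while uniformly random bytes are essentially incompressible, so how much a VI's default data shrinks on disk depends heavily on what the values actually are.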

Just for fun, I created an identical VI with a million bytes of random data saved as default data for each front panel array. That VI took about 1.2 megabytes on disk. That's still a 40% savings over the two megabytes of uncompressed data (a million bytes in each array), which is pretty good, I think. (Your mileage may vary.)
