Happy holidays, everybody. It's Friday, December 26, and I'm one of a handful of NI Austin employees not taking a vacation today. I got a really good parking spot. :)

A couple of recent blog comments have asked for more thoughts on LabVIEW API design. As it happens, I've been working with a device lately with a LabVIEW instrument driver that I'm not entirely happy with. So I will use this driver as an example to highlight a few points on API design.

To be fair, this driver is actually pretty good. It's a driver for a Hokuyo laser rangefinder, written by one of our interns. I think this is the first driver that we've written for a laser rangefinder, also called a LIDAR. The first driver for a particular type of instrument is hard. (That's why our instrument driver development tools encourage you to start with a driver for a similar instrument if you can.)

I'm revisiting this driver for a couple of reasons... 1) I am trying to extend the driver to support a newer, faster model in the same LIDAR family, and 2) I'm trying to get data from my current LIDAR faster.

If the first driver of a particular type is hard, the second is harder. Once I have two similar, but different, instruments, I have to resolve the differences in a way that makes sense for both devices.

Okay, let's dive into the API...

Not Enough Choices

When Initialize is called, instrument drivers generally query the instrument they are talking to, in order to ensure that it's really the right kind of instrument. Most Initialize functions let you turn this check off, either for performance reasons, or to let you try to use this driver for a different instrument with a compatible set of commands.

Here's the help window for the Hokuyo URG-04LX instrument driver Initialize VI...

There's something missing that just about every instrument driver Initialize function includes--the "ID Query" Boolean input. ("ID" as in "Identification".)

This latter example is what I was trying to do. I had a new model of Hokuyo URG LIDAR that I wanted to talk to. As expected, the instrument driver failed on the Initialize, since the identification string returned from the LIDAR didn't match an expected value.

Why wasn't the ID Query Boolean included on the Initialize VI? I don't know. I can imagine that the author didn't feel confident that the code worked with anything more than the specific hardware he or she tested. But I still would have included the Boolean, to make it easier for end users to try anyway.
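To make the ID Query idea concrete, here is a minimal sketch in Python rather than LabVIEW. Everything in it is an assumption for illustration: the `initialize` function, the `id_query` parameter name, the `"ID?"` command, the `EXPECTED_MODELS` list, and the `FakeTransport` class are made up and are not taken from the Hokuyo driver.

```python
class IdentificationError(Exception):
    """Raised when the instrument's ID string is not one the driver expects."""


# Hypothetical ID substrings this driver was tested against.
EXPECTED_MODELS = ("URG-04LX",)


def initialize(transport, id_query=True):
    """Open a driver session, optionally verifying the instrument's identity.

    `transport` is any object with a `query(command) -> str` method.
    The "ID?" command is a placeholder, not the real instrument command.
    """
    if id_query:
        identity = transport.query("ID?")
        if not any(model in identity for model in EXPECTED_MODELS):
            raise IdentificationError(f"unexpected instrument: {identity!r}")
    return transport


class FakeTransport:
    """Stand-in for a serial or USB link, so the sketch runs without hardware."""

    def __init__(self, identity):
        self.identity = identity

    def query(self, command):
        return self.identity


if __name__ == "__main__":
    newer_model = FakeTransport("SOME-NEWER-MODEL")  # made-up ID string
    try:
        initialize(newer_model)
    except IdentificationError as error:
        print("ID query failed:", error)
    # Turning the check off lets the user try the driver anyway.
    session = initialize(newer_model, id_query=False)
    print("connected without ID query:", session is newer_model)
```

The point of the default is that the safe behavior costs the user nothing, while the escape hatch is one Boolean away for anyone who wants to experiment with a compatible instrument.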

Too Many Choices

There's another input on Initialize labelled "Protocol"... This LIDAR has two slightly different ASCII command sets that it can support--version 1.1 and version 2.0.

How does a user know which to choose? Let's see what the help says for this input... Hmm. Sounds like 2.0 is better. Why not default to that? Why give the user a choice at all?

I can think of two reasons. First and most importantly, early units of this model of LIDAR had firmware that doesn't support SCIP 2.0. So, if we assume that SCIP 2.0 is available, this driver wouldn't work with those units. Second, the SCIP 2.0 protocol uses a few more bytes per command than the SCIP 1.1 protocol, and thus might be slightly slower in certain uses.

But I think we should not expose this protocol choice to users. Here's my reasoning...

1) The only users of this LIDAR that we know are using it with LabVIEW have the latest firmware, thus they wouldn't be affected by depending on 2.0.

2) The firmware is field-upgradable, so the user could update the LIDAR to be compatible with the driver.

3) The newer model of LIDAR I'm using only supports the 2.0 protocol, so it's confusing to have the option for 1.1 when it won't work on some devices.

4) The extra bytes for the commands don't seem to have a measurable impact; the measurement time of the instrument appears to be the gating factor on performance.

5) Dropping support for the old command set lets us remove almost half the code in the driver. Most VIs have a case structure for the 1.1 and 2.0 cases. We can now remove those case structures and just leave the 2.0 code inline.

Another couple of minor nit-picks as long as I'm showing you this front panel for Initialize... I would have hidden the digital displays for baud rate and protocol. The menu ring's label says 19200, so I don't need to see that the data value is also 19200. And the protocol data value of "1" is used only internally and doesn't need to be exposed to the user.

Giving Users Choices

I seem to have come up with two contradictory examples about giving users choices. For ID Query, I'm complaining that the API designer didn't give me the choice to turn it off. For the protocol, I'm complaining that the API designer gave me a choice I didn't want to make.

I think it all comes down to a judgement call. What are the chances that someone is going to want to use one of Hokuyo's other models of LIDARs? High, I think. What are the chances that somebody really must use the 1.1 communications protocol? Low, I think.

If the API designer really wanted to support both communications protocols, I would insist that he or she document how to choose between them. Which leads to a related issue in the driver...

Documenting Expected Usage

One of these LIDARs can use serial to communicate. (Both can use USB.) With serial, one of the parameters you have to configure is the baud rate--the communication speed of the device.

Most serial devices use a baud rate configured through either DIP switches, or through a front panel menu that stores the rate in non-volatile storage.

The Hokuyo URG-04LX uses a command sent through the serial port to configure the baud rate for the serial port. This is a classic "chicken and egg" problem. I have to use the serial port to configure the serial port.

When this device powers on, it defaults to 19,200 baud. If I want to transfer data at 115,200 baud, I have to connect at 19,200, then send a command to tell the LIDAR to communicate at 115,200 baud, then change my own connection to 115,200 before continuing the conversation.

Now suppose I finish my program and want to run it again. What should the initial baud rate be? In this case, I have to know that when I reconnect to the device, it is already configured to 115,200 baud. (Or I have to reset or power-cycle the LIDAR in between programs, to revert to 19,200 baud.)

This can get pretty confusing, which is why most devices configure their baud rates through physical switches.

Back to the API, there are two places where baud rate can be configured. First is on Initialize, as shown above. As I learned by studying the block diagram of Initialize, this baud rate is used for the initial communications to the instrument.

The second place you configure baud rate is with a configuration VI...

As I learned by studying the block diagram of this VI, it sends a command to the LIDAR to configure its serial baud rate. It does not change the baud rate of the host side.

So I think the expected usage is that you would call Initialize to establish the initial connection, then Configure Serial Baud Rate to increase the LIDAR link speed, and then use a VISA property node to change the host link speed. And I think you have to put in a short delay after that to get things to settle down after the baud rate change on the LIDAR.

Suggestion #1: Document this! Don't make every user have to figure this out on their own. If nothing else, make an example. It may not be a common use case, but if it's non-intuitive, an example or a little bit of documentation can be very helpful.

Suggestion #2: If two steps should always happen together, combine them. I modified the Configure Serial Baud Rate VI to also change the local baud rate and add the delay. That way, the end user doesn't have to remember to do this. I also thought about moving all of this into Initialize (which would take two baud rate inputs--initial, and desired). I voted against this, since there might be a use case where you just want to change the baud rate after you've already initialized.
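Here is a rough Python sketch of that combined step, under stated assumptions. The `FakeSerialLink` class, the `SET_BAUD` and `PING` commands, and the 0.1 second settle time are all invented so the example runs without hardware; they are not the real SCIP commands or the driver's actual timing.

```python
import time


class FakeSerialLink:
    """Stand-in for a host serial port plus the LIDAR on the other end."""

    def __init__(self, device_baud=19200):
        self.host_baud = 19200          # host side of the link
        self.device_baud = device_baud  # LIDAR side of the link

    def write(self, command):
        # The device only understands us when both ends use the same rate.
        if self.host_baud != self.device_baud:
            raise OSError("baud rate mismatch")
        if command.startswith("SET_BAUD "):
            self.device_baud = int(command.split()[1])


def configure_serial_baud_rate(link, new_baud, settle_seconds=0.1):
    """Change the LIDAR's baud rate, then the host's, then wait."""
    link.write(f"SET_BAUD {new_baud}")  # 1: tell the LIDAR (placeholder command)
    link.host_baud = new_baud           # 2: match the host side
    time.sleep(settle_seconds)          # 3: let the link settle (assumed delay)


if __name__ == "__main__":
    link = FakeSerialLink()
    configure_serial_baud_rate(link, 115200)
    link.write("PING")  # works: both ends are now at 115200

    # Rerunning the program against a LIDAR that is still at 115200
    # fails if the host starts at 19200 again.
    stale = FakeSerialLink(device_baud=115200)
    try:
        stale.write("PING")
    except OSError as error:
        print("second run failed:", error)
```

Keeping the three steps in one function means a caller cannot do the first and forget the other two. The second half of the demo shows the rerun problem described earlier: the host has to know what rate the LIDAR was left at.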

Making Changes

So what's going to happen to this instrument driver? For myself, I've hacked together an instrument driver that does what I want for my two LIDARs. Now, I'll be asking someone to go back in and make the edits the "right way", now that we know more about what the "right way" is. We'll then feed this update back onto the Instrument Driver Network for others to download and use.

Friday, December 26, 2008

LabVIEW API Design: A Quick Case Study

Posted by Brian Powell at 9:23 AM 2 comments

Wednesday, November 26, 2008

A Quick Update

I'll be traveling to Albuquerque and Denver next week for the NI Tech Symposia. If you live in New Mexico or Colorado, I hope to see you. (Dec. 2 for Albuquerque, Dec. 4 for Denver.) For more info... http://www.ni.com/seminars/.

I'll be presenting "What's New in LabVIEW 8.6", "Software Engineering Best Practices for LabVIEW", and "Using IEEE-1588, GPS, and IRIG-B for Multi-System Synchronization".

This weekend before the Albuquerque NI Tech Symposium, I'll be making my annual trip to the Bosque del Apache National Wildlife Refuge for some bird photography. Let me know if any of you are hanging out there.

A quick update on our employee giving campaign. (I got a lot of positive feedback on that post, by the way.) In these tough economic times, we managed to increase the total dollars pledged, as well as the number of donors. I am proud that NI employees recognize that non-profits need us now more than ever, and that we were able to step up and help meet those needs. I know of only one other Austin high-tech company that grew their campaign dollars this year.

For me personally, it was an emotionally rewarding experience. I'm glad the "Why me?" of heading the campaign turned into a "Yes".

I've got some more blog posts in my head, and I'll be trying to find the time to get them out.

Posted by Brian Powell at 10:52 AM 0 comments

Monday, October 06, 2008

Giving Back To Our Communities

On Friday night, I went to the opening night performance of a local non-profit choral group, Conspirare. They are an amazing, world-class group, and they put on a wonderful show that will soon be recorded by PBS. I even recognized in the audience Dr. Anton Armstrong, conductor of the renowned St. Olaf Choir.

Sitting in the audience, watching and listening to Conspirare, I could not help but think that this is an organization worth supporting. How fortunate we are to have them in Austin.

Today marks the beginning of our three-week employee fall giving campaign, of which I am the chair.

A few weeks ago, I received a mysterious meeting invitation in my inbox... "Quick chat with community relations". I had been warned that I was on the list of candidates to chair this year's campaign, so I knew what the meeting was going to be about. This gave me time to think about it.

At first, it was a "why me?" kind of experience. What was I signing up for? It's overwhelming and intimidating—we've got thousands of employees in the US, and I would be the voice of the campaign.

We've got a pretty good track record of employee giving. High standards, yet the economy is tough this year.

Sigh. I'm not sure I'm the guy for the job. It would be so easy to say no; to push this off on someone else.

But I don't. I won't.

I care about the community in which I live. I care about NI, and feel privileged to work here. I care about the arts. I care about education. I care about animals and the environment. I care about social needs, health needs, literacy needs.

With the consummate support of the National Instruments Community Relations team, especially Yvette Ruiz and Amanda Webster, here I am.

We hire a lot of our employees right out of school. This is great; keeps us young. It also means that some of our employees may not yet feel strong connections to their communities. And this is one of my challenges.

I asked for a field trip. I asked our community relations people if we could organize a field trip for our campaign volunteers.

I wanted our volunteers to see a need firsthand. We have dozens of volunteers, without whom we couldn't run this giving campaign. They go to every group meeting and explain the reasons for the campaign, the goals, and the mechanics.

I wanted our volunteers to see where the money goes, and what it buys, and to be able to talk about it to our employees.

We went to SafePlace, which fights domestic violence and sexual abuse.

A few days later, a different group of NI employees went to Dell Children's Medical Center of Central Texas.

And in the past few weeks, I've been to events for Communities in Schools, and the Austin Lyric Opera.

We've got thousands of non-profits in central Texas, thousands more nationwide, and our employees can choose to whom they donate. I want all of our employees to know that they can make a difference in their communities.

Another theme I want to stress is that any amount helps.

I am truly proud that NI has the largest number of employees who are able to donate $1000 or more to be a part of the United Way Capitol Area Young Leaders Society.

But many of our employees aren't in a position to donate that much. I want them to know that they can still make a difference, even with a small donation.

Among other things going on in my life right now, I'm raising money for cancer research and survivorship through the Lance Armstrong Foundation. I'll be doing the Livestrong Challenge bike ride at the end of October. (Plug: Support my ride here... austin08.livestrong.org/bhpowell.) One of my friends came up to me the other day and handed me a dollar bill in support of my ride. It's all he had to give. He promised another dollar in a week. That meant so much to me, as it also reminded me of a parable that I am sure many of you know.

My challenge to all of you readers, wherever you are, whoever you are, is to go out and make a difference in your community. Find your passion. Give your time. Give your money. Find someone who needs your support, and support them.

Posted by Brian Powell at 9:00 AM 1 comments

Thursday, October 02, 2008

AutoTestCon 2008

As I mentioned in an earlier post, I was at AutoTestCon in Salt Lake City a few weeks ago. This was my first visit to this MIL/AERO conference and tradeshow.

I was greeted at the Salt Palace Convention Center by banners with images of fighter jets and bombers over Monument Valley and Delicate Arch.

I was there to give a presentation on IVI, be part of a panel discussion on LXI, and to support a demo for the LXI Consortium...

Here's a photo of the demo I built for the consortium, using a Rohde & Schwarz FSL Spectrum Analyzer, a Keithley 2910 RF Signal Generator, two Agilent E5818A Trigger Boxes, an NI 8353 quad-core 1U PXI Controller, and NI LabVIEW 8.6.

The other demo in the booth consisted of Matlab and image processing software from The Mathworks, Agilent E5818A Trigger Boxes, and 1394 cameras from Point Grey Research. Colloquially called "the bouncing ball demo", it used a military grade Playskool Busy Basics Busy Popper for projectile measurements.

Okay, the part about "military grade" was a joke. The demo was there to make noise and entice people into the booth. Shown in the photo are Conrad Proft, from Agilent, and Rob Purser, from The Mathworks.

Both demos showed LXI features such as IEEE 1588 timing and distributing triggers across a network.

On Monday, I gave a presentation about IVI instrument driver technology at one of the seminars. This was just a basic introduction to what IVI drivers are, and an update on the status of current work in the IVI Foundation.

The tone was set for this presentation a few seconds into my talk. One attendee raised his hand and said, "IVI drivers don't work."

"I see it's going to be a tough crowd", I replied.

In this particular case, the user had obtained several IVI-COM drivers from his instrument vendor, and all but one failed to communicate correctly with his instruments. He had also received an IVI-C driver for an instrument from a different vendor, and he was unable to make it work until he got National Instruments involved to fix it for him.

This is a good time for me to point out that you don't have to depend on your instrument vendor for your instrument drivers. The National Instruments Instrument Driver Network (IDNet) contains instrument drivers for thousands of instruments from hundreds of different vendors, including IVI drivers, LabVIEW Plug and Play drivers, and VXIplug&play drivers.

Many of the drivers on IDNet are marked as "NI Certified", which means... Certified instrument drivers comply with instrument driver standards including programming style, error handling, documentation, and functional testing. Certified drivers ensure consistency among instrument drivers and, therefore, improve ease of use. They also provide source code so that you can modify, customize, optimize, debug, and add functionality to the instrument driver. All National Instruments certified instrument drivers receive NI support.

I was also on a panel discussion about LXI. We presented to about fifteen people.

A week later, we recorded a webcast for T&M World Magazine with the same material. You can view a replay of the webcast by registering on the T&M World website.

We had time for questions and answers during both versions of the presentation. Additional questions from the webcast will eventually be posted with answers on the LXI website.

Interestingly, most of the questions at AutoTestCon related to GPIB. Conrad Proft, from Agilent, had slides that showed examples where LXI works better than GPIB (over long distances) and RS-485 (cabling).

One question was whether companies are going to continue to support GPIB. Conrad from Agilent voiced his company's continued commitment to GPIB. I added that I believe that many people are still building GPIB-based test systems, and that NI will continue to support our GPIB users. (Later that week, NI announced our new PCI Express GPIB controllers that support a nearly 8 megabyte/second transfer rate, are RoHS compliant, and use only 1.1W of power.)

Another audience member, perhaps a little caught up in the excitement of LAN-based instrumentation, asked, "Why would anyone still use GPIB? It's slow. The cables are so inflexible."

My jaw dropped at this. I bet if you polled all the AutoTestCon attendees, just about every one of them is using GPIB, and is going to continue to use GPIB. So my answer began with, "Because it just works!".

"The GPIB cables are shielded and have rugged connectors that screw in and don't have plastic tabs that break off."

[Bringing it back to LXI] "...the message of the LXI Consortium is that it's important to ensure that LXI works well with other buses."

The reality is that our users are going to develop "hybrid" systems, using a mix of bus technologies. Every bus has pros and cons. GPIB has low communications latency and is rugged. LXI has cheaper cabling and works well over extraordinarily long distances. PXI and PXI Express have high communications bandwidth and low latency.

And that's why it's my job at National Instruments to help ensure that all forms of I/O work well in LabVIEW.

Posted by Brian Powell at 12:30 PM 0 comments

Labels: Agilent, AutoTestCon, Instrument Driver, IVI, IVI-C, IVI-COM, LabVIEW, LXI, Mathworks, Matlab, Playskool, Rohde Schwarz

Tuesday, September 02, 2008

AutoTestCon 2008 in Salt Lake City

I'll be in Salt Lake City next week, September 8-10, for AutoTestCon.

AutoTestCon is a large Mil/Aero conference and trade show sponsored by the IEEE.

I'll be doing a couple of presentations...

On Monday, I'm presenting as part of a seminar entitled, "VXI, PXI, IVI & LXI Standards Improve ATE Systems Design". I will be presenting the part about IVI. I'll cover what IVI is, the current state of IVI, future work, as well as technical information on configuration, the differences between IVI-C and IVI-COM, and how to use class and specific drivers together.

On Wednesday, I will be part of a panel discussion on "Test Applications Using LXI Instruments". Hosted by Test & Measurement World chief editor Rick Nelson, the panel plans to share some of what we've learned over the past three years creating multi-vendor LXI-based test systems.

Posted by Brian Powell at 9:22 AM 0 comments

Labels: AutoTestCon, IEEE, IVI, IVI-C, IVI-COM, LabVIEW, LXI, PXI

Monday, August 04, 2008

NIWeek 2008

It's Monday of NIWeek 2008, so it's a tradition for the Austin American-Statesman (the local newspaper) to have a nice article about NI. Today's was about Green Engineering.

Today is Alliance Day, and the full-blown conference starts tomorrow.

I'll be presenting again this year, on Thursday...

My topic is about using NI hardware and software products to work with LXI-based test systems.

I'll be around the convention center all week, including the LAVA dinner Tuesday night, and the conference party on Wednesday. Feel free to find me and say hi.

Posted by Brian Powell at 2:28 PM 2 comments

Tuesday, June 24, 2008

Thoughts on Network Protocols for Instrumentation

Among my other responsibilities, I still hang out with the LXI crowd. Lots of conference calls, and a quarterly face-to-face meeting. (Thank you, TestForce, for hosting our recent meeting in Toronto.)

Following up on my earlier blog post, National Instruments did join the LXI Consortium at some point (last year, I think). I believe that having one network-instrumentation standard to follow is better than having many, and the technical side of the consortium is constantly working to devise solutions to limitations inherent in a network-based test system.

There are a variety of challenges revolving around the fact that LAN opens up the door to having more than one "controller" in a test system.

In a test system using RS-232 or USB, for example, any single device talks to only a single "controller"—typically the test system's PC. A GPIB system is a form of network, but there's always one "controller in charge". Nobody can easily intrude into the test system.

Is this a "feature", or a "limitation"? It all depends on the test system. What if you wanted to see test data from the outside world? Today, you'd probably put a network connection on your test PC, and let it serve up data through the LabVIEW Web Server, or store data into a corporate database. Nobody could talk to the instruments directly; they would always go through the PC.

But an alternative that LXI allows is to connect your private test network to the rest of your company's intranet (or the entire internet, if you want). This allows others to interact directly with the measurement instruments. You probably wouldn't want this feature in all test systems, but it opens the door to some interesting possibilities.

Even if you don't connect your test network to the internet, you might still want to have more than one test PC talking to the devices. Or one grand vision of the consortium is that instruments can control other instruments. How does an instrument manage multiple connections from multiple controllers at once? Does this sound complicated? It is.

And this is where the LXI Consortium comes in. What can the consortium do to make it "safe" to have more than one controller on your network?

There are many aspects of this that the consortium is working on—defining behavior when there are too many connections for an instrument to handle, for example. One particularly challenging problem is how to "reserve" or "lock" an instrument in your test program so that nobody else comes in and changes settings in the middle of your test.

It's worse than that, really. Just discovering instruments on your network can have the accidental side effect of interrupting a test program. And it's especially this kind of inadvertent access to a test instrument that the consortium is trying to resolve.

One step in this direction was announced in the LXI 1.2 standard. There will be a new mechanism for discovering LXI devices that is less obtrusive than the current approach. Once instruments that support the next version of the LXI standard are available, this very common form of inadvertent access should be solved.

But there are still challenges with test systems with more than one computer or instrument trying to talk to a single device at the same time. For that, we really need to be able to reserve the instrument for exclusive use by a single test program (or perhaps even a single part of a larger test program).

This problem has been solved before in this domain. In 2004, I helped write an article for T&M World entitled Migrating to Ethernet. I discussed the VXI-11 protocol for communicating with instrumentation over Ethernet.

Because VXI-11 defines processing on both the host (PC) and client (instrument), it is able to provide a way to reserve or lock the instrument in a test program. With VXI-11 devices, the VISA commands viLock and viUnlock can be used to lock the instrument so that it only responds to the program that has the lock.

However, VXI-11 has kind of fallen out of favor with instrument vendors. One reason is that it requires a fair amount of processing horsepower inside the instrument. VXI-11 is based on a technology called RPC, or Remote Procedure Calls. With RPC, much of the processing happens on the instrument, requiring more processing power, more memory, and thus, higher overall cost.

Instrument vendors want to move to simpler protocols. The consortium therefore needs to take a fresh look at instrument locking, and hopefully come up with a proposed solution in the 2009 or 2010 versions of the LXI standard.

Until then, fortunately, most LXI instruments continue to support VXI-11 and you can use viLock and viUnlock (in LabVIEW, they are called "VISA Lock Async" and "VISA Unlock") to lock instruments in your test system.

One caveat is that IVI drivers do not support the same kind of locking. You need to have access to the VISA session to properly lock the device. This is one of the benefits of using a LabVIEW Plug and Play (or for C users, VXIpnp) driver. Since these kinds of drivers use the VISA session directly, you can easily add VISA Lock and VISA Unlock calls to your program without major rework.
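For text-based programs, the lock-then-unlock pattern might look something like the Python sketch below. It assumes a resource object with `lock_excl(timeout)` and `unlock()` methods, which is how PyVISA exposes viLock and viUnlock as far as I know; the `exclusive_access` helper, the `FakeResource` class, and the `MEASURE?` command are hypothetical and exist only so the example runs without an instrument.

```python
from contextlib import contextmanager


@contextmanager
def exclusive_access(resource, timeout_ms=5000):
    """Hold an exclusive lock on `resource` for the duration of a block.

    Assumes `resource` has `lock_excl(timeout)` and `unlock()` methods.
    """
    resource.lock_excl(timeout_ms)
    try:
        yield resource
    finally:
        # Always release the lock, even if the test step raises.
        resource.unlock()


class FakeResource:
    """Records lock state so the sketch runs without an instrument."""

    def __init__(self):
        self.locked = False
        self.sent = []

    def lock_excl(self, timeout):
        self.locked = True

    def unlock(self):
        self.locked = False

    def write(self, command):
        self.sent.append((command, self.locked))


if __name__ == "__main__":
    instrument = FakeResource()
    with exclusive_access(instrument) as locked_instrument:
        locked_instrument.write("MEASURE?")  # placeholder command
    print("sent while locked:", instrument.sent)
    print("still locked afterwards:", instrument.locked)
```

Wrapping the pair in a context manager guards against the easy mistake of taking the lock and then skipping the unlock when a test step fails.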

Stay tuned as the standards evolve.

Posted by Brian Powell at 4:00 PM 0 comments

Monday, May 05, 2008

64-bit LabVIEW

Last week, we announced the 64-bit LabVIEW beta. That announcement reveals a little about how I've spent the last couple of years of my life.

A long, long time ago, when I first started at NI, we were pretty proud of the fact that LabVIEW started its life as a 32-bit Macintosh application. We didn't suffer the pains of some applications that were having to live in (and later convert from) the 16-bit DOS and Windows environments.

But in some parts of the source code, we weren't as rigorous with our data types as we should have been. I asked the guy next to me, "Hey, Steve. Should I be worried about all these places we assume pointers fit into 32 bits?"

"No," he responded, "we do that all over the place. Somebody far in the future will have to go through all of LabVIEW and fix that."

I did not know then that the "somebody" would be me.
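For readers who haven't run into this class of bug, here is a tiny Python illustration of what goes wrong when code assumes a pointer fits into 32 bits. The addresses are made-up values and `store_in_32_bits` is a hypothetical stand-in for stashing a pointer in a 32-bit integer field; it is not LabVIEW source code.

```python
import struct

# Size of a native pointer in this Python build: 4 on 32-bit, 8 on 64-bit.
POINTER_BYTES = struct.calcsize("P")


def store_in_32_bits(address):
    """Mimic code that stashes a pointer in a 32-bit integer field."""
    return address & 0xFFFFFFFF


low_address = 0x0040_1000             # fits in 32 bits
high_address = 0x0000_7FFF_0040_1000  # needs more than 32 bits

print("pointer size in bytes:", POINTER_BYTES)
print(store_in_32_bits(low_address) == low_address)    # True
print(store_in_32_bits(high_address) == high_address)  # False
```

On a 32-bit system every address looks like the first case, so the shortcut is invisible. On a 64-bit system the upper half of the address is silently dropped, and the truncated value points somewhere else entirely.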

As I alluded to in my Twenty Years post, when I first started working on this, it seemed like an insurmountable task. LabVIEW source code is big, with a lot of developers having their hands in the code over time. And it's part GUI, part compiler, part runtime engine, and part kitchen sink.

Fortunately, I soon got help—a small, but really good team—and we just started plugging away at it. The little milestones along the way...

- Compile the source code without errors

- Compile, link, and crash on launch

- Launch to Untitled 1

- Launch to the Getting Started window

- etc.

There was a snowball effect—we'd fix something, and then a whole bunch of stuff would start working.

I wouldn't say it's been easy. When we first started, I had a fair amount of skepticism... I had fears that we would hit a brick wall, or we'd discover something that would require more effort than we were willing to invest. That skepticism was justified. We had to make some tradeoffs to keep the project from getting out of hand.

From a software engineering perspective, I think we did a good job of containing the risk to 32-bit LabVIEW while pushing forward with 64-bit LabVIEW. (All the code is shared.) Perhaps more on that later.

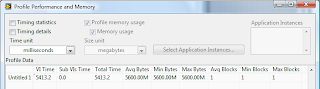

I'm pretty happy with our level of quality in this beta release. If you've got access to a 64-bit Windows machine, I encourage you to sign up for the beta and give it a try. Here's a teaser for the kinds of things you'll be able to do. (Click to enlarge the image.)

Posted by Brian Powell at 10:46 AM 9 comments

Labels: LabVIEW

Thursday, February 21, 2008

Twenty Years

Last month, I celebrated twenty years at National Instruments.

One of the first things I learned about software development at NI is that food plays a prominent role. Back in the early days, it was mostly Double-Stuf Oreos®.

Hardly a day goes by without someone sending out a food announcement email—bagels, doughnuts, leftover party food. There's almost always a reason for the food: an anniversary, or "Thanks to Kevin for helping me figure out this problem", or "I broke the build".

During one test day, someone wrote a LabVIEW application that sits in the system tray (multi-platform, of course) and pops up to let you know that someone has brought in food. It also showed you the shortest path from your desk to the food. Who says testing isn't fun?

For my fifteen year anniversary, I bought fifteen dozen Krispy Kreme® doughnuts and scattered them around several floors of the building I'm in. It's frighteningly easy to buy 15 dozen doughnuts. They didn't bat an eye. They did offer to help carry them to my car.

For twenty years, I made a couple of desserts, both from the Hotel Limpia (Fort Davis, Texas) cookbook. I made the most decadent chocolate brownies ever—containing about 20 pounds of chocolate and sugar. And in a feeble attempt to provide enough for everybody on the team, I made dozens and dozens of oatmeal raisin cookies.

But enough about food. That's not what keeps me coming back to this place every day.

Let's tie this all back to software engineering.

While software engineering is mostly about the process of developing software, one aspect of it has to cover how you get people to come into the office every day and do the work. Food is nice. Pay is important. But it's the cool projects that keep me coming back.

I'm working on a long-term project that I sooo want to be able to talk about. (Soon, soon.) I've been working on this project for a couple of years, and I still come in to work each day eager to work on it. What seemed like an insurmountable problem when I started is now within reach, thanks to a small and really good team we've put together.

A Sense of Urgency

There's a lot to be proud of as I look back over twenty years, but I come to work every day thinking about what's next. And maybe that's something to be proudest of—I helped build a software development process that is still one I want to be part of after twenty years.

I come to work every day with a sense of urgency. I think all good software teams do. It's not a sense of panic. Okay, maybe it occasionally approaches panic, but mostly it's under control. It's more wanting to relentlessly make progress, every day. A little bit more works every day, and soon we've worked past major obstacles. Celebrate briefly. And we keep going, because we're not done yet.

It's a whole lot like twenty years ago on the LabVIEW team. And that's a very good thing.

And my project is one of many. There are many other small teams here working on exciting things, with their own sense of urgency.

So I think it's pretty cool that after twenty years at the same job, I'm still having fun. We haven't run out of things to do. We haven't run out of ideas. We aren't "done" yet.

Posted by Brian Powell at 9:36 AM 8 comments

Labels: LabVIEW